Are you ready to level up your video creation game with AI? Wan 2.2 is making cinematic, professional-grade video generation more accessible than ever. In this guide, we'll walk you through prompt engineering and show you how to use Wan 2.2 to produce stunning videos. Let's dive in together!

01 What is Wan 2.2?

Wan 2.2 is an advanced open-source AI video generation model that has quickly become a favorite among creators and developers alike. Developed with cinematic and professional use in mind, Wan 2.2 stands out for its ability to produce high-quality videos from widely varying prompts, textures, and inputs. Whether you're a filmmaker seeking to prototype scenes or a content creator looking to accelerate your workflow, Wan 2.2 offers both power and flexibility. It supports 480p and 720p output at 24fps, covering a broad range of applications from social media content to higher-end animations.

Key Features and Advantages

- Cinematic Aesthetic Control: This model deeply integrates film-industry-standard aesthetics, providing users with nuanced control over lighting, color, composition, and more.

- Dual-Resolution Output: Choose between 480p and 720p at 24 frames per second, optimizing for either speed or visual fidelity.

- Efficient Design: Under-the-hood advancements make Wan 2.2 surprisingly efficient—whether on local hardware or in the cloud, users can expect smooth performance and relatively low resource demands.

- Open Source and Versatile: With various models (including a compact 5B parameter dense model and a powerful 27B MoE version), Wan 2.2 can be adapted to different deployment scenarios, from laptops to scalable cloud clusters.

02 How to Write Effective Prompts for Wan 2.2

1. Why Prompt Engineering Matters in Video Generation

Prompt engineering is at the core of AI video generation's power and flexibility. The creative outcome hinges heavily on how well you define your intentions with each prompt. A precise, vivid prompt can guide the model to produce stunning, cinematic visuals; a vague or underspecified one may yield confusing or lackluster results. Mastering this "language of instruction" is critical to unlocking Wan 2.2's true potential.

2. Basic Prompt Structure for Wan 2.2

Strong prompts often utilize the following elements:

- Subject: What do you want to see? Specify the character, object, or scene.

- Action: What's happening? Is there movement, transformation, or a particular activity?

- Style and Aesthetic: Describe the mood, colors, atmosphere, or genre.

- Environment/Background: Where is your subject? Day or night, indoors or outdoors?

- Camera Details (Optional): Include details like close-up, wide shot, or camera movement for even more control.

Example Prompt: "A teenage girl with flowing hair running through a golden wheat field at sunset, soft cinematic lighting, shot in slow motion."
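The five elements above can be treated as fields that are joined into a single prompt string. The following is a minimal sketch of that idea, not part of Wan 2.2 itself; the `ShotPrompt` class and its field names are hypothetical and exist only for illustration.

```python
from dataclasses import dataclass


@dataclass
class ShotPrompt:
    """Hypothetical container for the prompt elements listed above."""
    subject: str
    action: str
    environment: str = ""
    style: str = ""
    camera: str = ""  # optional camera details

    def render(self) -> str:
        # Subject, action, and environment form the core clause.
        core = " ".join(p for p in (self.subject, self.action, self.environment) if p)
        # Style and camera details are appended as comma-separated modifiers.
        modifiers = [p for p in (self.style, self.camera) if p]
        return ", ".join([core, *modifiers]) + "."


prompt = ShotPrompt(
    subject="A teenage girl with flowing hair",
    action="running",
    environment="through a golden wheat field at sunset",
    style="soft cinematic lighting",
    camera="shot in slow motion",
).render()
print(prompt)
```

Keeping the elements separate makes it easy to change one of them, such as the camera detail, while holding the rest of the shot constant.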

3. Advanced Prompting Techniques

- Genre and Reference Integration: Specify genres (e.g., cyberpunk, noir) or artistic inspirations.

- Storyboarding with Multiple Scenes: Chain prompts to create coherent multi-shot sequences.

- Instructional Layering: Add parameters about color grading, lighting style (e.g., "backlit", "neon glow"), and camera gear to push fidelity.

- Hybrid Inputs: Use an image as a reference for style or composition in addition to textual prompts for a more targeted result.
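One way to approach multi-shot storyboarding is to repeat the same character description and visual style in every prompt so that separately generated clips are more likely to match. The sketch below assumes each shot is generated from its own prompt; the `build_storyboard` helper is hypothetical, and consistency between clips is not guaranteed by this approach alone.

```python
def build_storyboard(character, beats, look):
    """Return one prompt per shot, repeating the character and look verbatim."""
    return [f"{character} {beat}, {look}." for beat in beats]


shots = build_storyboard(
    character="A detective in a long gray coat",
    beats=[
        "steps out of a taxi onto a rain-soaked street",
        "pushes open the door of a dim jazz bar",
        "sits at the counter and studies a photograph",
    ],
    look="noir, backlit, neon glow",
)

for number, prompt in enumerate(shots, start=1):
    print(f"Shot {number}: {prompt}")
```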

4. Common Pitfalls and Troubleshooting

- Overly Vague Prompts: These often yield generic or off-target outputs. Always add detail where possible.

- Overspecification: Too many conflicting or dense instructions may confuse the model. Find a balance.

- Artifacts and Unintended Elements: If results show odd visuals, try simplifying or rewording the prompt, or clearing up ambiguities.
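A quick pre-flight check can catch the first two pitfalls before you spend time generating. The sketch below is purely illustrative: the word-count thresholds and the list of conflicting terms are assumptions chosen for the example, not values documented for Wan 2.2.

```python
# Pairs of instructions that usually cannot both hold in one shot (assumed list).
CONFLICTING_TERMS = [
    ("close-up", "wide shot"),
    ("slow motion", "time-lapse"),
]


def check_prompt(prompt, min_words=6, max_words=60):
    """Return a list of warnings; an empty list means no issue was detected."""
    warnings = []
    text = prompt.lower()
    word_count = len(text.split())
    if word_count < min_words:
        warnings.append(f"possibly too vague: only {word_count} words")
    elif word_count > max_words:
        warnings.append(f"possibly overspecified: {word_count} words")
    for first, second in CONFLICTING_TERMS:
        if first in text and second in text:
            warnings.append(f"conflicting instructions: '{first}' and '{second}'")
    return warnings


print(check_prompt("A dog"))
print(check_prompt("A close-up wide shot of a dog running on a beach at dawn"))
```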

03 How to Use Wan 2.2 Step by Step (4 Methods)

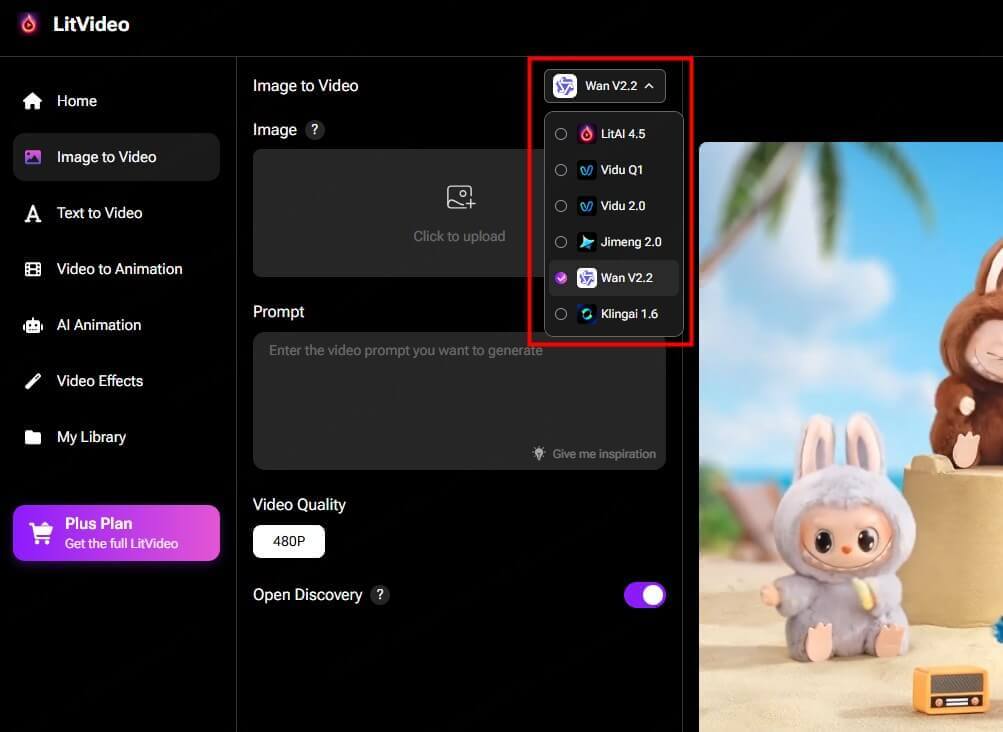

1. Using Cloud-based Online Tools (LitVideo)

For those who want to avoid local setup or want to compare multiple models, LitVideo is the go-to online platform. LitVideo aggregates powerful video models—including Wan 2.2, Vidu Q1, Kling AI, and more—offering video creators an efficient and cost-effective solution.

How to use Wan 2.2 on LitVideo:

LitVideo's model collection and unified interface make it ideal for creators who want to maximize flexibility without investing in powerful local hardware.

2. Local Deployment and Usage

Power users and those seeking privacy or maximum customization may prefer running Wan 2.2 locally.

Steps for Local Deployment:

- System Requirements: Ensure your system matches the hardware needs (GPU with enough VRAM for the selected model variant: 5B or 27B).

- Download and Install: Clone the official Wan 2.2 repository (usually via GitHub). Set up any required dependencies (Python environment, specific libraries).

- Model Weights: Download pre-trained weights, as specified in the official docs.

- Run Locally: Use the provided command-line scripts, or employ a local GUI if available. Feed in prompts and process results on your machine.

- Resource Management: Monitor GPU usage and optimize parameters (resolution, batch size) for the best efficiency.
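To get a feel for how resolution affects workload before tuning parameters, you can compare the number of frames and raw pixels per clip. This is a rough back-of-the-envelope sketch: the pixel dimensions for each resolution label are assumptions, and pixel count is only a proxy for generation cost, not a measurement of VRAM or runtime.

```python
FPS = 24  # frame rate stated in this guide

# Assumed pixel dimensions for each resolution label.
RESOLUTIONS = {"480p": (832, 480), "720p": (1280, 720)}


def clip_cost(resolution, seconds, fps=FPS):
    """Return (frame count, total pixels) for a clip of the given length."""
    width, height = RESOLUTIONS[resolution]
    frames = seconds * fps
    return frames, frames * width * height


frames_480, pixels_480 = clip_cost("480p", 5)
frames_720, pixels_720 = clip_cost("720p", 5)
ratio = pixels_720 / pixels_480

print(f"5 s clip: {frames_480} frames at either resolution")
print(f"720p needs about {ratio:.1f}x the pixels of 480p")
```

Under these assumptions, a 720p clip carries roughly 2.3 times the pixels of a 480p clip of the same length, which is why drafting at 480p and rendering the final take at 720p is a common way to save time.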

3. Accessing via API

For seamless software integration, you can utilize Wan 2.2's API access. This is perfect for app developers, automation workflows, or businesses needing to generate videos programmatically.

How to Use the API:

- Register for Access: Get an API key from a platform that offers Wan 2.2 as a service.

- Integrate: Use the endpoint (usually RESTful) to send your prompts and receive generated content.

- Example Request (Python):

      import requests

      # Replace YOUR_API_KEY with the key issued by your provider.
      headers = {"Authorization": "Bearer YOUR_API_KEY"}
      data = {"prompt": "A neon-lit cityscape at night, rain falling", "resolution": "720p"}

      # Send the prompt to the provider's generation endpoint.
      response = requests.post("https://api.wan2.2provider.com/generate", headers=headers, json=data)
      response.raise_for_status()  # stop on HTTP errors before reading the body
      video_bytes = response.content

- Automation: Fit this into web apps, creative tools, or media pipelines with minimal effort.
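For use in a pipeline, the request can be wrapped in small reusable functions. The sketch below uses only the Python standard library and keeps the placeholder URL from the example above. It assumes, as that example does, that the endpoint returns the raw video bytes in the response body; many hosted services instead return a job ID to poll, so check your provider's documentation. The function names `build_request` and `generate_video` are hypothetical.

```python
import json
import urllib.request

# Placeholder endpoint from the example above, not a real service.
API_URL = "https://api.wan2.2provider.com/generate"


def build_request(prompt, resolution="720p", api_key="YOUR_API_KEY"):
    """Build (but do not send) a POST request carrying the prompt as JSON."""
    payload = json.dumps({"prompt": prompt, "resolution": resolution}).encode("utf-8")
    return urllib.request.Request(
        API_URL,
        data=payload,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def generate_video(prompt, out_path, timeout=300, **options):
    """Send the request and write the returned bytes to out_path."""
    request = build_request(prompt, **options)
    # urlopen raises urllib.error.HTTPError on 4xx and 5xx responses.
    with urllib.request.urlopen(request, timeout=timeout) as response:
        video_bytes = response.read()
    with open(out_path, "wb") as handle:
        handle.write(video_bytes)
    return out_path


request = build_request("A neon-lit cityscape at night, rain falling")
print(request.get_method(), request.full_url)
```

Separating request construction from sending makes the payload easy to inspect or log before any network call is made.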

4. Integration with Visual Workflow Tools (e.g., ComfyUI)

Non-coders and visual thinkers can adopt tools like ComfyUI to streamline AI video workflows.

How to Use Wan 2.2 with Visual Tools:

- Install Workflow Tool: Download and install ComfyUI, ensuring compatibility with your local Wan 2.2 install.

- Add Wan 2.2 Nodes: Integrate model-specific "nodes" or plugins within the workflow interface.

- Drag, Drop, and Tune: Build a pipeline visually—setting input sources, prompts, output destinations, and parameter controls without writing code.

- Experiment Visually: Adjust prompt components, batch settings, or model parameters in real time, fostering rapid experimentation and iteration.

Conclusion

Wan 2.2 represents the cutting edge of open AI video generation, combining cinematic control with scalable, accessible deployment options. From crafting effective prompts to selecting your ideal workflow—whether through cloud tools like LitVideo, local deployment, API integration, or user-friendly visual frameworks—you can go from an idea to a professional-grade video with ease. Take the time to experiment with your prompts and preferred workflow, and you'll unlock a world of creative possibilities powered by the next generation of AI video.